Stitching

Stitching Architecture

One of the key difficulties in a federation is coordinating experiments which span multiple federates. We call this the stitching problem. The difficulty with stitching is that depending on what kind of connection is used by the federates, multiple parties must agree on configuration or resource reservations before they have all of the information about the final resource allocation.

Types of Stitching

The simplest form of stitching consists of federates each having access to a common network with no particular tunneling arrangement. If two component managers both have access to the commodity Internet, for instance, then the resources on those component managers can be allocated independently without direct coordination. For this to work, the client need only be able to determine whether or not a particular node has access to the network. This information can be provided in the advertisement via a special node which represents the commodity Internet (or some other network such as Internet2), together with a link from each connected PC to that special node.
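As a rough illustration of that check, the sketch below models an advertisement as a set of nodes plus a list of links, with one special node standing in for the shared network. The dictionary layout and the names `ADVERTISEMENT`, `has_network_access`, and `stitchable_nodes` are assumptions made for this example; they are not the real advertisement (RSpec) schema.

```python
# Hypothetical, simplified advertisement. The real RSpec format differs.
ADVERTISEMENT = {
    "nodes": {"pc1", "pc2", "pc3", "internet"},
    "links": [("pc1", "internet"), ("pc3", "internet"), ("pc1", "pc2")],
}


def has_network_access(advertisement, node, network="internet"):
    """Return True if the advertisement links `node` directly to `network`."""
    return any({a, b} == {node, network} for a, b in advertisement["links"])


def stitchable_nodes(advertisement, network="internet"):
    """List the nodes that can be stitched through the shared network."""
    return sorted(
        n
        for n in advertisement["nodes"]
        if n != network and has_network_access(advertisement, n, network)
    )
```

A client would run a check like this against each component manager's advertisement and then allocate the matching nodes independently.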

A more complicated form of stitching is the creation of tunnels. These are links created as an overlay on top of an existing network. From a coordination perspective, one key aspect of tunnels is that they only require configuration at their endpoints and therefore only require coordination between pairs of component managers which are setting them up. The intervening network requires no special configuration. In a tunnel, the coordination usually consists of exchanging information about the endpoints. In a GRE tunnel, this may just be the public IP address of the other side; for VPNs, this may involve key exchange as well.

The limitation this exchange imposes is that one component manager may be required to create a sliver before a request has been made of the other side. Our current implementation requires both slivers to exist, and the tunnel is then created during update requests. This is a rather cumbersome workaround, and we plan to implement a more graceful solution similar to the multi-party VLAN negotiation described below.

The most general and difficult form of stitching consists of links or LANs which can span any number of Component Managers simultaneously. Concretely, we are most concerned with Ethernet VLANs which can join more than two endpoints and which can span zero or more backbone Component Managers which do not contain any endpoint nodes. In addition, unless VLAN translation is supported in backbone routers, a single VLAN number must be agreed upon and reserved by all Component Managers involved.

Stitching Strategies

There are therefore three important problems to be solved here. First, negotiation must be done with incomplete information about the topology when some participants have not yet been contacted by the client. Second, the negotiation can span more than two component managers. Third, the negotiation involves a limited resource that must be agreed to and reserved by all parties rather than merely exchanging local parameters. These problems must all be solved in the context of federation where each party remains autonomous with local policies determined by different administrators.

We have designed a solution to these problems, and are currently working on implementing this design. In our general API model, we have three different phases which correspond to instantiating a sliver. The client calls GetTicket to reserve a set of resources to a slice, then RedeemTicket to acquire those resources, and finally StartSliver to set up those resources so they are ready for use. Some aspects of starting a sliver, such as physically imaging and booting a node, may take a long time. This means that StartSliver runs asynchronously and the user may poll using SliverStatus to determine when their sliver has completed setup.
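The three phases and the polling loop can be sketched from the client's point of view as follows. `FakeComponentManager` is a stand-in invented for this example; the argument lists, return values, and state strings are assumptions, and a real component manager would be reached over RPC with credentials that are omitted here.

```python
import time


class FakeComponentManager:
    """Stand-in for a CM (hypothetical); it only tracks a state string."""

    def __init__(self, polls_until_ready=2):
        self._polls_left = polls_until_ready
        self.state = "idle"

    def GetTicket(self, slice_name, rspec):
        # Phase 1: reserve resources to the slice.
        self.state = "ticketed"
        return {"slice": slice_name, "rspec": rspec}

    def RedeemTicket(self, ticket):
        # Phase 2: acquire the reserved resources.
        self.state = "redeemed"
        return {"slice": ticket["slice"]}

    def StartSliver(self, sliver):
        # Phase 3: returns immediately; setup finishes later.
        self.state = "starting"

    def SliverStatus(self, sliver):
        # Each poll moves the fake setup one step closer to completion.
        if self.state == "starting":
            self._polls_left -= 1
            if self._polls_left <= 0:
                self.state = "ready"
        return self.state


def instantiate_sliver(cm, slice_name, rspec, poll_interval=0.0, max_polls=10):
    """Run GetTicket, RedeemTicket, StartSliver, then poll SliverStatus."""
    ticket = cm.GetTicket(slice_name, rspec)
    sliver = cm.RedeemTicket(ticket)
    cm.StartSliver(sliver)
    for _ in range(max_polls):
        if cm.SliverStatus(sliver) == "ready":
            return sliver
        time.sleep(poll_interval)
    raise TimeoutError("sliver did not become ready")
```

Because StartSliver returns before setup completes, work that depends on other component managers can be deferred to this phase without blocking the client.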

After a ticket is created during GetTicket, it represents a reservation of resources. Therefore the selection and reservation of VLAN numbers or other allocatable resources must occur during GetTicket. Since GetTicket is a synchronous operation, this allocation must occur or fail quickly and needs to include the cross-CM allocations for all VLANs in the topology which are mapped in some way onto the manager which is creating the initial ticket.

When communicating with multiple Component Managers, the user can call GetTicket on all of them before redeeming any tickets, or they may choose to call GetTicket, RedeemTicket, and then StartSliver on one component manager before moving on to the next. The client is not required to run these requests in parallel. This means that VLANs may need to be allocated before all of the component managers have received a GetTicket request.

We will therefore create an interface which will provide for cross-CM allocation of VLAN numbers without binding those VLANs to particular sets of switches or nodes. These will be reserved for a limited time based on the local policy of each CM, and the allocation can be initiated by the first CM contacted by the client. When the ticket is returned to the client, its lifetime will be constrained by the reservations made at other component managers for those VLANs.
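One way to picture such a reservation is a per-CM pool that holds a tag for a policy-defined period without tying it to any switch or node. The sketch below is only an illustration under that assumption: `VlanPool`, `hold_seconds`, and `ticket_expiry` are hypothetical names, and time is passed in explicitly to keep the example deterministic.

```python
class VlanPool:
    """Per-CM pool of VLAN tags with time-limited holds (hypothetical)."""

    def __init__(self, tags, hold_seconds):
        self.free = set(tags)
        self.hold_seconds = hold_seconds  # local policy of this CM
        self.held = {}  # tag -> time at which the hold expires

    def expire(self, now):
        """Return every tag whose hold has run out to the free set."""
        for tag, expiry in list(self.held.items()):
            if expiry <= now:
                del self.held[tag]
                self.free.add(tag)

    def reserve(self, tag, now):
        """Hold `tag` and return its expiry time, or None if unavailable."""
        self.expire(now)
        if tag not in self.free:
            return None
        self.free.remove(tag)
        expiry = now + self.hold_seconds
        self.held[tag] = expiry
        return expiry


def ticket_expiry(local_expiry, remote_expiries):
    """A ticket cannot outlive the shortest reservation backing it."""
    return min([local_expiry, *remote_expiries])
```

For example, if the first CM would normally issue a ticket valid for 900 seconds but a peer holds the tag for only 300 seconds, `ticket_expiry(900, [300])` yields 300.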

Actually instantiating the VLANs requested by the client requires the topology in both component managers to be bound so that the set of switches and their configuration options can be specified. This means that the instantiation of cross-CM VLANs must occur as part of the StartSliver process, so that it can be done asynchronously while waiting for other component managers to generate tickets and redeem them. Other non-allocation negotiation, such as agreeing on the particular entry point of a VLAN, can also be done as part of the StartSliver process.

This mechanism also provides locality. Since only the VLAN numbers associated with a given component manager are reserved by a ticket returned by that component manager, the client can incrementally create tickets at each component manager and retry a particular component manager if it fails rather than having to change the whole topology.

Given this basic framework, the exact negotiations need not be specified by the API and can evolve along with our federation. Currently, we have a single backbone Component Manager, and therefore it would be easy to set aside certain VLANs for cross-CM topologies and allow the backbone to make the decision unilaterally within those reserved VLANs. In the longer term, as the federation grows, the negotiation process can become more complex to prevent a single point of failure without changing the overall structure.

Pairwise Stitching Algorithm

Here are thoughts on coordinating a VLAN reservation between CMs. This is a pairwise allocation approach. (This is not going to work in the case that more than one CM needs to coordinate the same VLAN tag.)

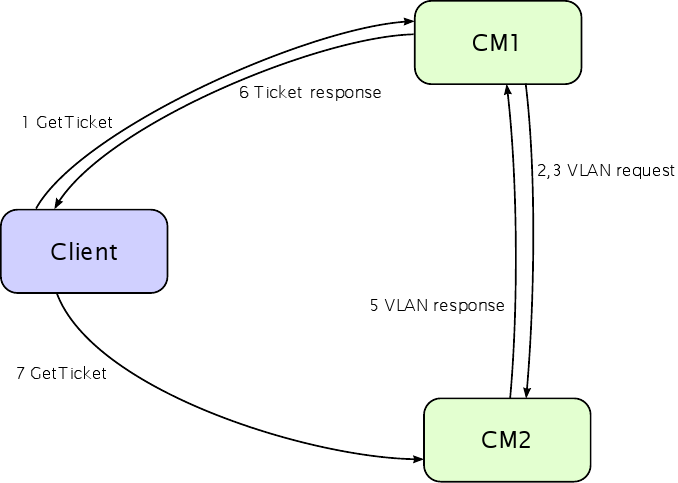

- CM1 receives a GetTicket request and notices from the rspec that there is a link that crosses to another CM, for which a common VLAN tag must be arranged.

- CM1 calls over to CM2, passing the rspec. Note that 1) CM2 might not have received its GetTicket request, and 2) the rspec that CM1 sends might not be fully specified with respect to CM2's portion.

- CM1 passes CM2 a set of possible VLAN numbers. CM2 responds with the VLAN that would work for it, or an error if none of them will work. CM1 has reserved all numbers in the set locally, so it can be sure it can get them later when CM2 responds.

- If CM2 returns an error, CM1 can give up at this point, or it can send another set of VLAN numbers to CM2, to try again. The first set is released locally, and another set allocated.

- If instead CM2 finds an acceptable tag (one it can reserve locally), it returns that to CM1. CM1 releases all the other VLANs in the set. Both sides now have that VLAN tag reserved.

- CM1 generates the ticket and puts the VLAN tag into it. This avoids the problem of the client having to request a new copy of the manifest after StartSliver(). It also allows the CM to fork/return after the RedeemTicket() part of a CreateSliver() operation.

- CM2 gets the GetTicket request that corresponds to the rspec it got from CM1. It looks to see if there is a global reservation in the DB, and if so uses that tag.

Aside: what if CM1 and CM2 both get the GetTicket request at the same time, and make a request to the other side at the same time? Both sides will have a slice structure, and that slice will be locked. Both sides need to back off so that one side can proceed to become the master.

- If CM2 does not see the GetTicket() request in a reasonable amount of time, it will release the VLAN tag reservation.

Aside: What if the GetTicket() request comes through later? At this point CM1 is committed to a particular tag. CM2 will contact CM1 as above, but now CM1 has only a single choice. If CM2 can still locally reserve that tag, great. Otherwise the GetTicket() at CM2 will return a failure.
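The offer and response steps above can be sketched in a few lines. This is a minimal in-process model, not the implementation: the class `ComponentManager`, the methods `negotiate` and `handle_offer`, and the `set_size` and `max_rounds` parameters are all assumptions, and the rspec exchange, slice locking, and timeouts are left out.

```python
class ComponentManager:
    """Toy CM that tracks free and reserved VLAN tags (hypothetical)."""

    def __init__(self, name, free_tags):
        self.name = name
        self.free = set(free_tags)
        self.reserved = {}  # link name -> set of locally reserved tags

    def _reserve(self, link, tags):
        """Reserve whichever of `tags` are free here; return that subset."""
        got = set(tags) & self.free
        self.free -= got
        self.reserved.setdefault(link, set()).update(got)
        return got

    def _release(self, link, tags):
        """Give back tags that are currently reserved for `link`."""
        held = self.reserved.setdefault(link, set())
        back = set(tags) & held
        held -= back
        self.free |= back

    def handle_offer(self, link, offered):
        """CM2 side: reserve and return one acceptable tag, else None."""
        for tag in sorted(offered):
            if self._reserve(link, [tag]):
                return tag
        return None

    def negotiate(self, peer, link, set_size=2, max_rounds=3):
        """CM1 side: offer locally reserved sets until the peer accepts."""
        tried = set()
        for _ in range(max_rounds):
            candidates = sorted(self.free - tried)[:set_size]
            if not candidates:
                break
            offer = self._reserve(link, candidates)
            tried |= offer
            chosen = peer.handle_offer(link, offer)
            if chosen is not None:
                # Keep the agreed tag and release the rest of the set.
                self._release(link, offer - {chosen})
                return chosen
            # The peer rejected the whole set: release it and try another.
            self._release(link, offer)
        return None
```

The late GetTicket() case described in the last aside corresponds to an offer containing a single tag: the peer either reserves it or the request fails.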

Emulab Database notes

Regarding the structure of a global VLAN reservations table: since there might not be an existing slice/experiment yet, the key to the table needs to be somewhat flexible. Something like:

    CREATE TABLE `reserved_vlantags` (
      `name` varchar(128) NOT NULL default '',
      `idx` int(10) unsigned NOT NULL auto_increment,
      `tag` smallint(5) NOT NULL default '0',
      `reserve_time` datetime default NULL,
      `locked` datetime default NULL,
      `state` varchar(32) NOT NULL default '',
      PRIMARY KEY (`name`,`idx`)
    ) ENGINE=MyISAM DEFAULT CHARSET=latin1;

- name is some arbitrary string, perhaps a slice URN combined with the linkname.

- idx is to support sets of reserved tags for a single name (as during negotiation).

- tag is the reserved VLAN tag number.

- reserve_time to support aging out stale reservations.

- locked is a lock, but not sure I need a row lock yet.

- state is a string to support the handshake described above, or other more complicated state machines if necessary.
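To show how these columns might be used together, here is a small sketch against an in-memory SQLite database rather than the MySQL table above. The schema is a simplified analogue (integer timestamps, explicit idx values, no locked column), and the helper names and the state strings 'offered' and 'committed' are assumptions made for the example.

```python
import sqlite3


def open_db():
    """Create an in-memory table loosely mirroring reserved_vlantags."""
    db = sqlite3.connect(":memory:")
    db.execute(
        """CREATE TABLE reserved_vlantags (
               name TEXT NOT NULL,
               idx INTEGER NOT NULL,
               tag INTEGER NOT NULL,
               reserve_time INTEGER,
               state TEXT NOT NULL DEFAULT '',
               PRIMARY KEY (name, idx)
           )"""
    )
    return db


def reserve_set(db, name, tags, now):
    """Record a whole set of candidate tags under one name."""
    rows = [(name, i, tag, now, "offered") for i, tag in enumerate(tags)]
    db.executemany("INSERT INTO reserved_vlantags VALUES (?, ?, ?, ?, ?)", rows)


def commit_tag(db, name, tag):
    """Keep only the agreed tag for `name` and mark it committed."""
    db.execute(
        "DELETE FROM reserved_vlantags WHERE name = ? AND tag != ?", (name, tag)
    )
    db.execute(
        "UPDATE reserved_vlantags SET state = 'committed' "
        "WHERE name = ? AND tag = ?",
        (name, tag),
    )


def age_out(db, now, max_age):
    """Delete reservations at least `max_age` old; return how many went."""
    cur = db.execute(
        "DELETE FROM reserved_vlantags WHERE reserve_time <= ?", (now - max_age,)
    )
    return cur.rowcount


def tags_for(db, name):
    """Return the tags currently reserved under `name`, ordered by idx."""
    query = "SELECT tag FROM reserved_vlantags WHERE name = ? ORDER BY idx"
    return [row[0] for row in db.execute(query, (name,))]
```

Here `name` would be something like a slice URN combined with the link name, so a reservation can exist before any slice or experiment record does.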

Test Case

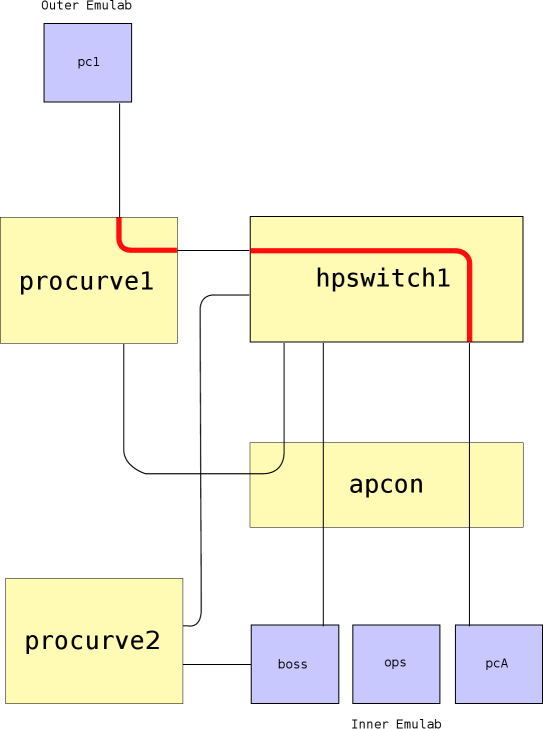

Here is the proposed test case for stitching between two CMs. Yellow boxes are switches, blue boxes are nodes, black lines are wires, and red lines are VLANs.